Orca

Orca: Empowering human drivers in autonomous futures

You grip the steering wheel, check your rearview mirror, and communicate with other drivers with a simple wave of your hand. You are in an L0 to L2 human-driven vehicle. Our research suggests that 30 years from now, people will begin to gravitate toward L4 and L5 fully autonomous vehicles, the vehicles of the future that require little to no human input, and we found this future to be inevitable. Humans will no longer need to watch the road while their fully autonomous vehicle takes them where they want to be. Our findings indicate that on the way to this future, society will encounter what we call the Chaotic Era, a time in which roughly 50% of street-legal vehicles are human driven while the remaining 50% are some form of fully autonomous. We predict that a plethora of communication complications will arise during this transition. Orca is designed to empower human drivers in this future: drivers who prefer to be in full control of their vehicles. Our solution is an augmented reality heads-up display that translates existing human communication behavior, like hand signals, into data perceivable by autonomous vehicles and transmits it to the network of surrounding vehicles. We believe that Orca will break down the language barrier between man and machine in our inevitable autonomous future.

Team Members:

Jaein Kim

Ada Chen

Karim Ismail

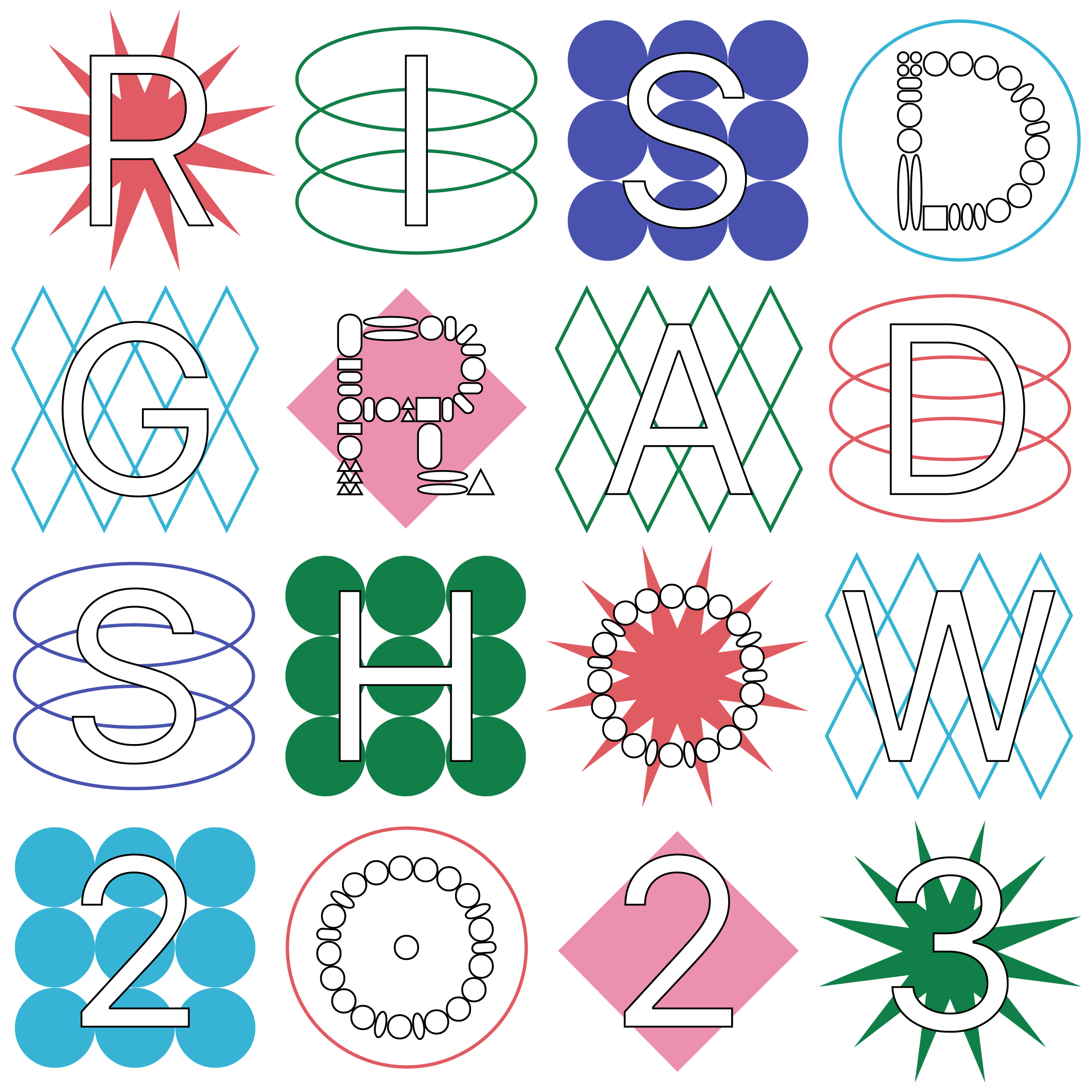

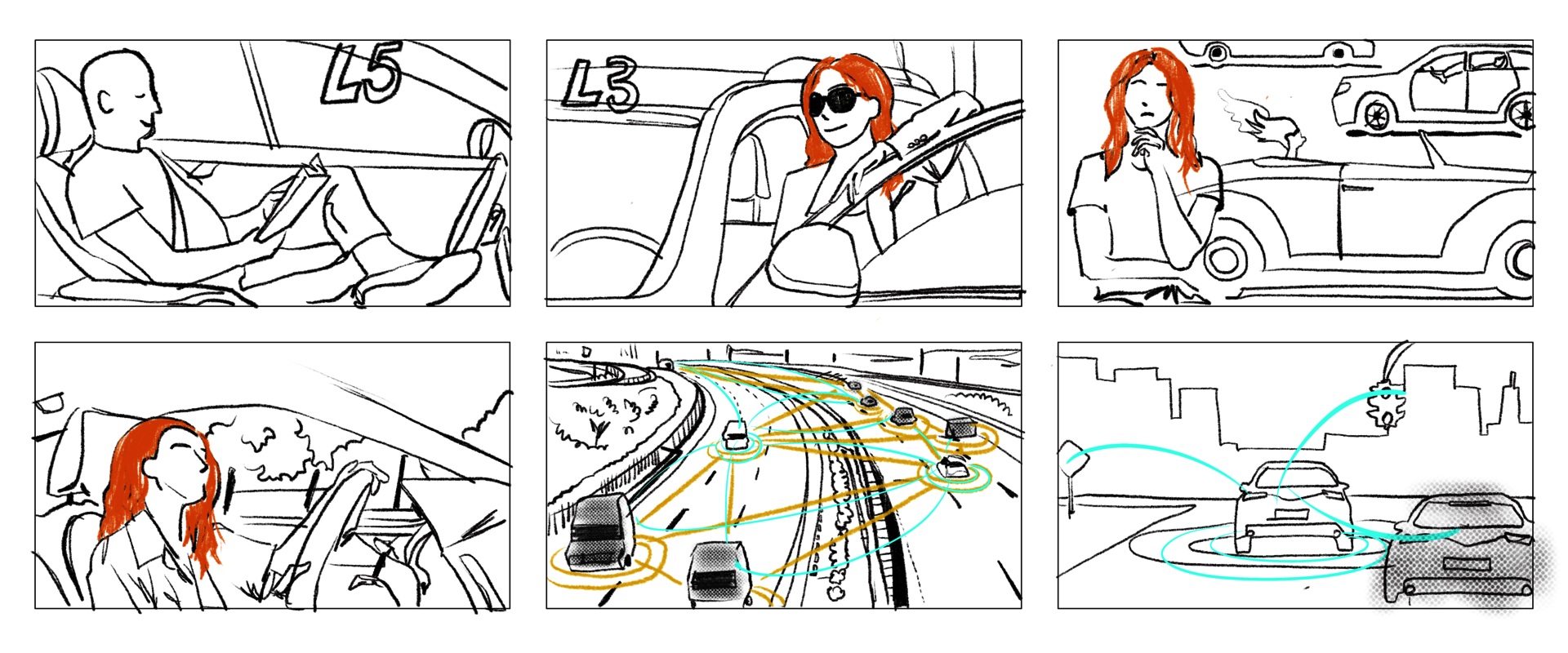

What is the “Chaotic Era”?

When the iPhone was first introduced in 2007, it took time for consumers to fully embrace the idea of a touchscreen phone, an iPod, and a breakthrough internet communications device replacing their flip phones. This consumer behavior follows the Innovation Adoption Curve. The initial number of users grows gradually because of speculation, while only a few innovators truly see the potential of the product or technology. After this wave come the early adopters and the early majority, and with them the Chaotic Era. During the Chaotic Era, fully autonomous vehicles will be adopted by roughly 50% of the population, while the remaining 50% might still choose to drive their own vehicles. This choice may stem from a desire for control, financial status, or persistent skepticism. In the storyboard below we introduce you to Ava, a successful working mom of two who enjoys driving her L2 Volvo, a vehicle that still requires a human driver to remain in control. Driving on a Chaotic Era road that is becoming more saturated with fully autonomous vehicles, Ava is not in sync with the edge computing network of the L4 and L5 vehicles, which makes it impossible for her to communicate with 50% of vehicles or understand their intentions. With Orca, Ava is able to tap into the same network and communicate with surrounding vehicles just as she would if a human driver were behind the wheel.


The Orca sits on the dashboard of an L0 to L2 human-driven vehicle, “projecting” onto the windshield. The orange area represents the projection angle. In the bottom left corner, elements of the concept user interface can be seen. (Note: the physics of light emission limits projection onto glass, so the concept may be hard to perceive as shown. By utilizing light field display technology, however, projection onto glass can be achieved.)


Image top: Project background and the Innovation Adoption Curve, with our target audience highlighted in orange.

Image bottom: Ava’s storyboard showing her driving a traditional car when the cars around her are fully autonomous.

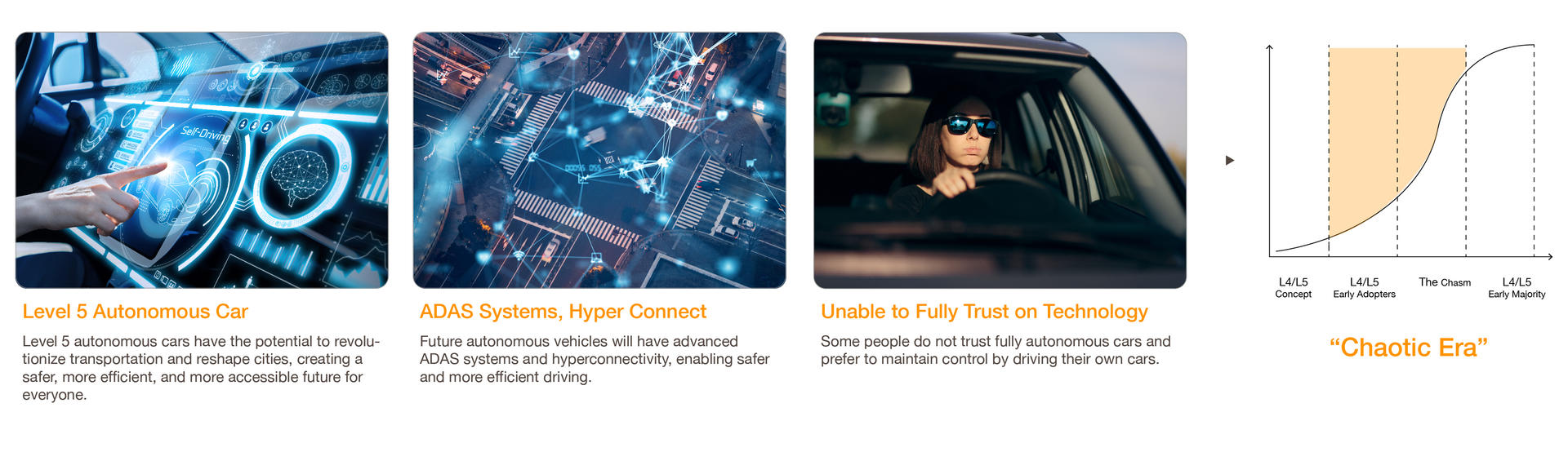

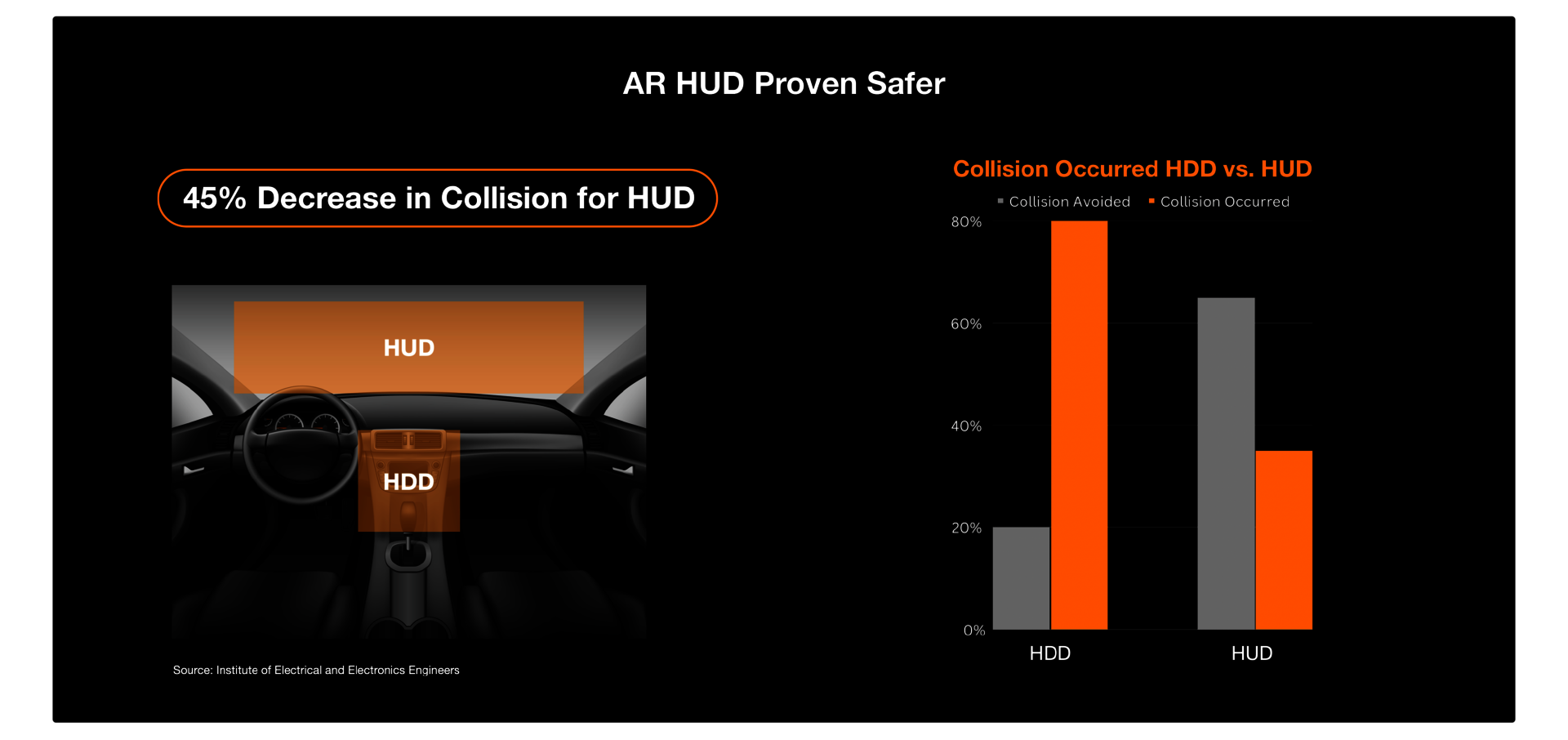

Evidence: why a HUD and hand gestures

Heads-up display (HUD) technology in human-driven vehicles has been shown to be 45% safer than heads-down displays, such as the infotainment systems in today's cars (where CarPlay typically lives). This is what makes the Orca beneficial to drivers starting today. Through primary user research we learned that hand gestures are the main form of communication between drivers on the road, whether at a four-way intersection or when turning onto a different street. Hand gestures can be captured in real time by the camera on the Orca and translated into data that can be perceived by surrounding autonomous vehicles, or by other human-driven vehicles that are also equipped with an Orca. Using machine learning algorithms, Orca can adapt to and learn from a user’s behavior. This means that although each user may prefer a different hand gesture for a specific action, the outside world still receives the same communication. With this technology, drivers will no longer need to differentiate between human-driven vehicles and autonomous ones.


Image top: Diagram and graph of research showing that a heads-up display is 45% safer than a traditional heads-down display.

Image bottom: The hand gestures most commonly seen during user testing.
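The gesture translation idea described above, where different drivers' personal gestures map to the same outgoing message, can be sketched roughly as follows. This is only an illustrative Python sketch, not Orca's actual implementation: the gesture labels, the `Intent` set, the `GestureTranslator` class, the confidence threshold, and the JSON message layout are all hypothetical, and the gesture recognizer itself (the camera and machine learning model) is assumed to exist upstream and to emit a label with a confidence score.

```python
import json
import time
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional


class Intent(Enum):
    """Canonical intents sent to surrounding vehicles (hypothetical set)."""
    YIELD = "yield"                  # "go ahead, you first"
    THANK_YOU = "thank_you"
    REQUEST_MERGE = "request_merge"


# Hypothetical default labels, as an upstream gesture classifier might emit them.
DEFAULT_GESTURES: Dict[str, Intent] = {
    "palm_wave_forward": Intent.YIELD,
    "raised_open_hand": Intent.THANK_YOU,
    "point_sideways": Intent.REQUEST_MERGE,
}


@dataclass
class GestureTranslator:
    """Maps one driver's gesture labels to a shared, canonical intent."""
    vehicle_id: str
    min_confidence: float = 0.8      # assumed threshold, not from the project
    personal: Dict[str, Intent] = field(default_factory=dict)

    def learn(self, gesture_label: str, intent: Intent) -> None:
        """Record that this driver uses `gesture_label` to mean `intent`."""
        self.personal[gesture_label] = intent

    def translate(self, gesture_label: str, confidence: float,
                  now: Optional[float] = None) -> Optional[str]:
        """Return a JSON message for nearby vehicles, or None if unsure."""
        if confidence < self.min_confidence:
            return None              # too uncertain to broadcast
        # The driver's personal mapping takes priority over the defaults.
        intent = self.personal.get(gesture_label,
                                   DEFAULT_GESTURES.get(gesture_label))
        if intent is None:
            return None              # unknown gesture
        return json.dumps({
            "vehicle_id": self.vehicle_id,
            "intent": intent.value,
            "timestamp": time.time() if now is None else now,
        }, sort_keys=True)


# Usage: a personal gesture and a default gesture yield the same message.
ava = GestureTranslator(vehicle_id="ava-volvo")
ava.learn("two_finger_flick", Intent.YIELD)
personal_msg = ava.translate("two_finger_flick", confidence=0.93, now=0.0)
default_msg = ava.translate("palm_wave_forward", confidence=0.91, now=0.0)
print(personal_msg == default_msg)
```

Keeping the personal mapping separate from the canonical intent is what would let each driver keep their preferred gesture while receiving vehicles only ever see one shared vocabulary.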

Solution

The Orca is a portable, smart augmented reality heads-up display. It uses cloud computing to communicate, via autonomous vehicle communication standards, with autonomous vehicles and other Orcas. As more and more fully autonomous vehicles enter the streets, the Orca will adopt edge computing technology to send data to and receive data from autonomous vehicles. Orca levels the playing field and breaks down the language barrier between man and machine. With this, all drivers can feel safe and confident driving the type of vehicle they prefer, knowing that they have the power to communicate with all other vehicles and have the same real-time information displayed at a glance.

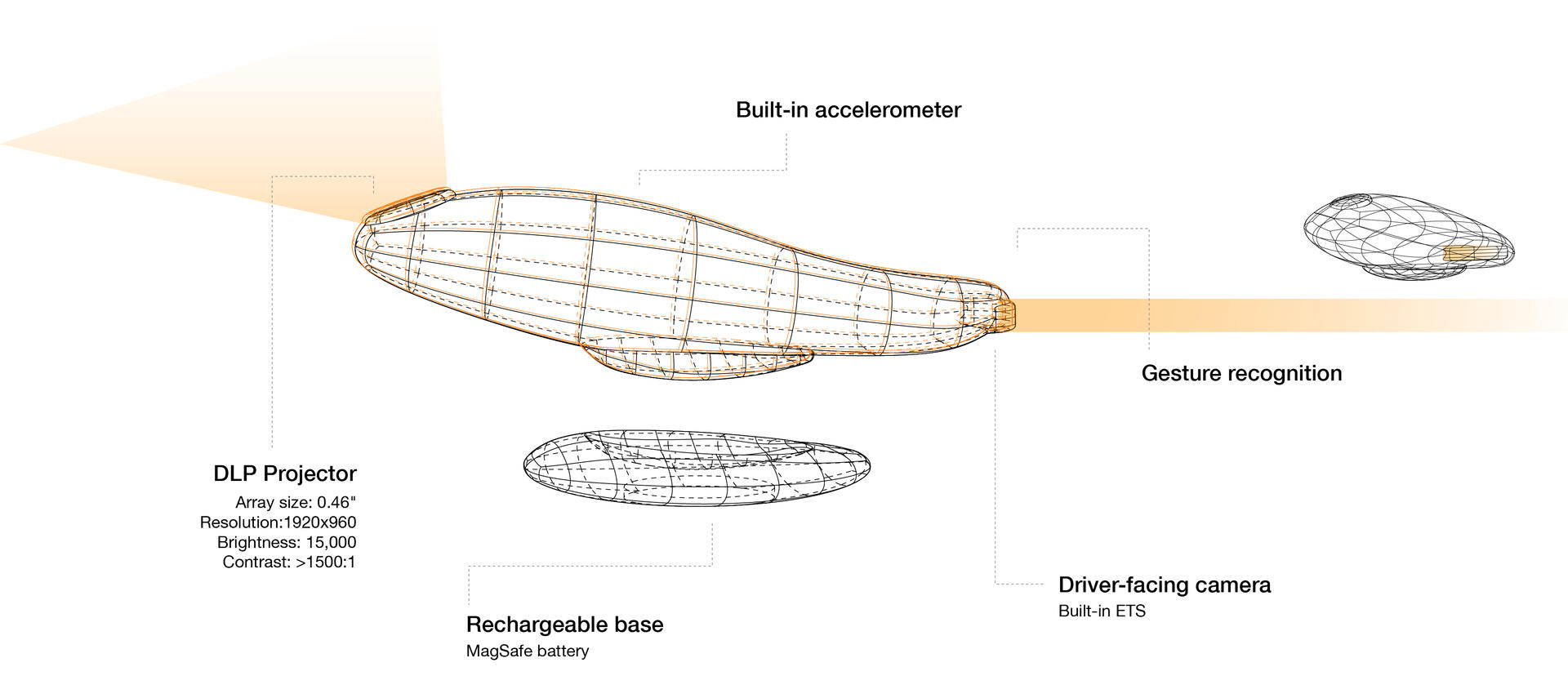


Diagram of the Orca showing how it works and the components that make up the physical product, including a chart of the gestures the algorithm is able to recognize.

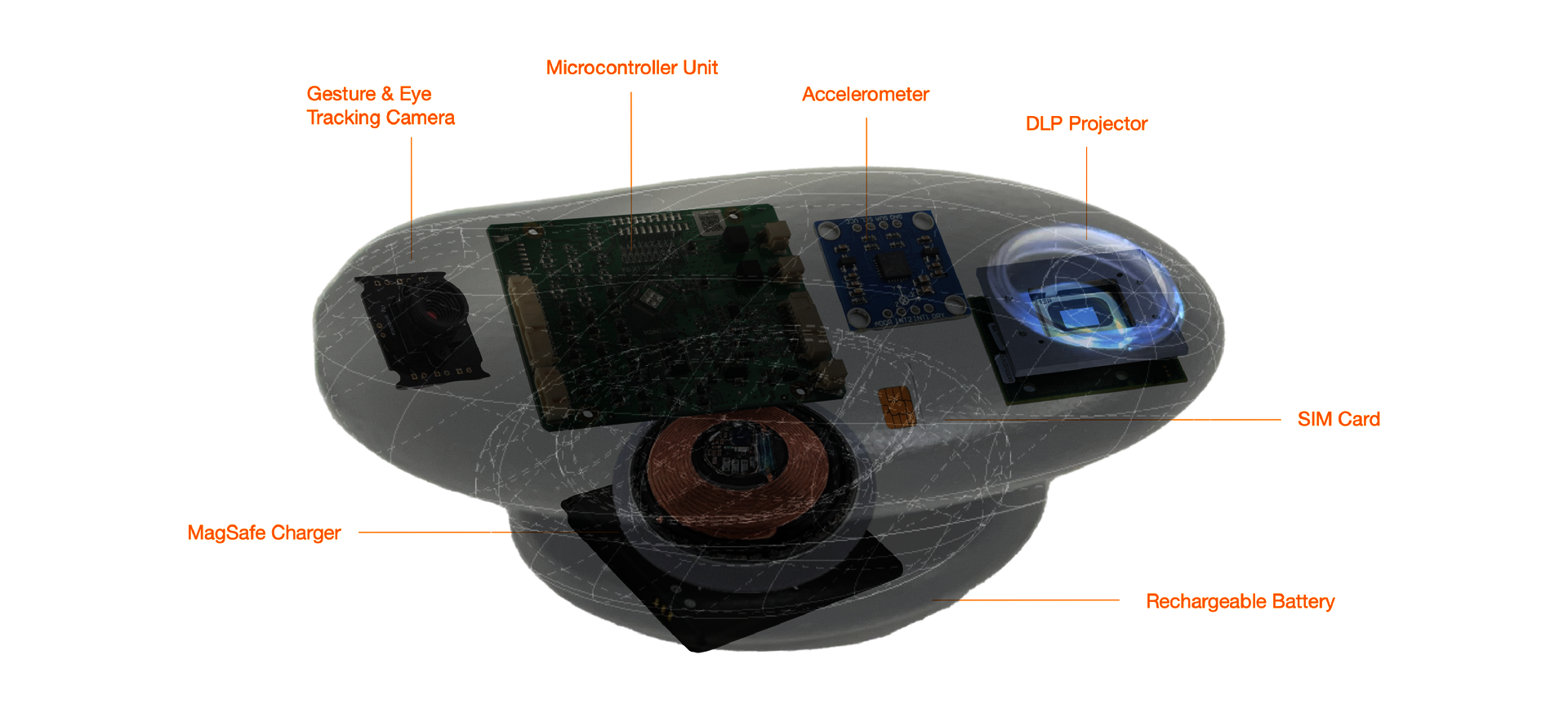


Diagram showing the internal components of the Orca. It indicates the required microcontroller unit, accelerometer, gesture and eye tracking camera, etc. for each location.


Videos of the Orca in use: from setting up the Orca in your car to how you interact with the UI in three different driving scenarios when facing autonomous vehicles.